How Does an Intelligence Explosion Impact the Future of Cybersecurity?

You may have missed the earth-shattering predictions that a group of very smart humans published two weeks ago: the AI 2027 report. I have read their elaborate scenario and watched this three-hour discussion with two of the authors.

First of all, who are these people that are predicting the potential demise of the human race in less than three years? Scott Alexander is a successful blogger in the world of tech. His blog here on Substack is Astral Codex Ten. Here are his comments on AI 2027 from ten days ago.

Daniel Kokotajlo could be called an AI ethicist. He left OpenAI and refused to sign the onerous non-disparagement agreement, which led OpenAI to rescind that requirement for all employees. He writes here.

Like most scenario planners, they started with today and projected forward based on how we got here. That is what I want to focus on. These technologists understand AI deeply and are well positioned to predict its rapid increase in intelligence based on the breakout developments of the last three years and what is happening now.

Before I begin, I take note of AI skeptics like Gary Marcus, who essentially claim the exact opposite trajectory for LLMs. He claims LLMs have been maxed out and are struggling to improve.

The entire AI 2027 scenario hinges on one thing: that the engineers responsible for improving models at OpenAI, Anthropic, Google, and DeepSeek will use existing models to assist them, as indeed they already are. This will progress until they have created AI research assistants that are smarter than our smartest humans. By 2027 there will be “superhuman AI researchers.”

After that, AI intelligence grows so fast that superintelligence will appear to have happened overnight. Alvin Toffler and Adelaide Farrell will be nodding their heads at the level of disruption this will cause. “See, this is what we were talking about in Future Shock.”

Rather than delve into the team’s predictions about misalignment (evil AIs wiping out the human race), I want to talk about the implications for our comparatively mundane world of cybersecurity.

The scenario assumes a rapid increase in AI intelligence, with superintelligence only two years away.

Of the 96 “AI Security” vendors tracked in the IT-Harvest Dashboard, 15 are in a category I call SOC Automation. The concept is simple: alert triage will be handled by AI agents.

There have always been two sides to cybersecurity:

The protective side represented by firewalls, hardened configurations, multi-factor authentication, and encryption—things that either stop attacks altogether or dramatically increase the costs for the attackers.

The detective side. This is where the SIEM comes into play. Everything that can be is instrumented to report what is happening. Logs and alerts are funneled into a centralized SIEM where they are prioritized based on algorithms. SOC analysts use analytic tools to “hunt” down the causes of the tiny, tiny fraction of alerts that they have time for and take actions to stop an ongoing attack or clean up after an attack.

(Yes, there is a third side to cybersecurity, compliance, which seeks to demonstrate that the protective and detective sides are deployed and working.)
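To make the detective side concrete, here is a minimal sketch of the prioritize-then-investigate loop described above. The `Alert` fields, the scoring rule, and the capacity number are all assumptions for illustration, not how any particular SIEM works.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    source: str             # e.g. "firewall", "edr" (illustrative labels)
    severity: int           # 1 (low) to 5 (critical); hypothetical scale
    asset_criticality: int  # 1 to 5; hypothetical scale


def priority(alert: Alert) -> int:
    # Toy scoring rule (an assumption): severity weighted by asset importance.
    return alert.severity * alert.asset_criticality


def triage(alerts: list[Alert], analyst_capacity: int) -> tuple[list[Alert], list[Alert]]:
    # Rank alerts from highest to lowest priority, then split them into the
    # few that analysts have time for and the rest that go uninvestigated.
    ranked = sorted(alerts, key=priority, reverse=True)
    return ranked[:analyst_capacity], ranked[analyst_capacity:]


alerts = [
    Alert("firewall", severity=2, asset_criticality=1),
    Alert("edr", severity=5, asset_criticality=4),
    Alert("email-gateway", severity=3, asset_criticality=2),
]
investigated, ignored = triage(alerts, analyst_capacity=1)
```

In a human-staffed SOC, `ignored` is by far the longer list. The SOC automation pitch, as I read it, is that AI agents work through that list too.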

The other types of “AI Security” include Guardrails, like Knostic, Arthur, and Credal, and Model Protection, like Protect AI and Prompt Security.

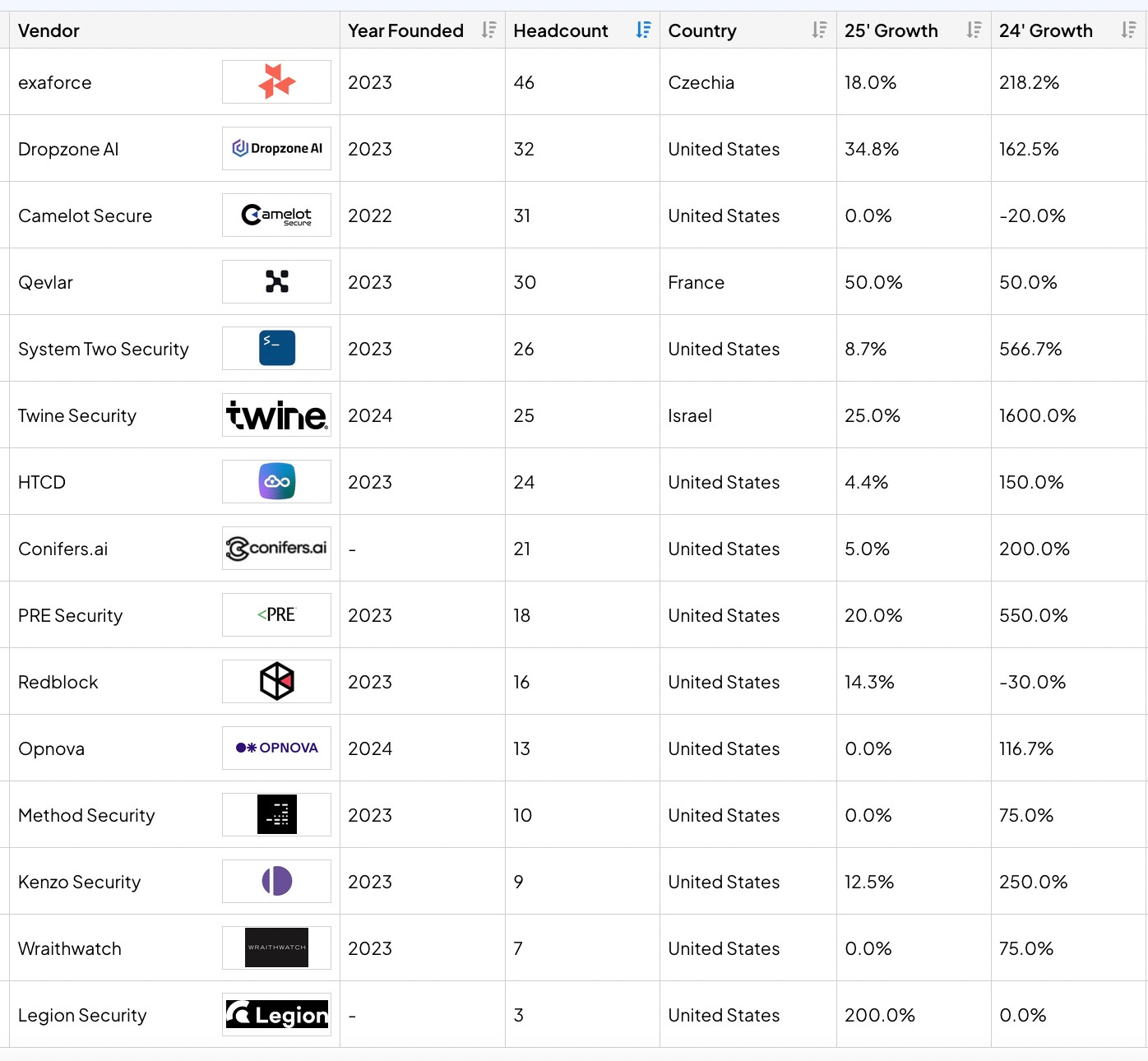

Here is a list of the vendors who are focused on AI agents for SOC automation.

Note that they are mostly two years old and have very small headcounts. Note also that the biggest, Exaforce, just announced a $75 million Series A investment from Khosla Ventures and Mayfield.

You may think the press release over-hypes their “Agentic SOC Platform that combines AI agents (called ‘Exabots’) with advanced data exploration to give enterprises a tenfold reduction in human-led SOC work, while dramatically improving security outcomes.” But you would be wrong.

Meanwhile this past week Torq acquired an Israeli startup that was still in stealth. Ofer Smadari, CEO, said: “By integrating Revrod into Torq HyperSOC 2o, our most advanced platform yet, we’re delivering the world’s first OmniAgent: a robust, collaborative, AI-driven system that autonomously investigates, triages, and remediates threats with near-human-level analysis and precision.”

I believe that while AI agents may just barely work today, by the end of the year they will work so well that most of these 15 vendors will experience skyrocketing sales. Only those that attract enough investment will be able to scale to meet the demand.

Here are more predictions based on the concept of the intelligence explosion described in AI 2027. Keep in mind that we are talking about the biggest technological shift in our lifetimes; bigger than the internet, mobile computing, virtualization, and cloud computing. So there are going to be outsize changes in the landscape.

-By the end of 2026, 95% of all SOCs, including those of MSSPs, will use AI agents. As a result, most medium to large companies will see a dramatic decline in their security spend and will no longer need a large percentage of their security teams.

-More than 50% of SMBs will subscribe to automated detection and remediation from new suppliers.

-At least 50 new SOC automation startups will come out of stealth before the end of this year.

-Several of these companies will have billion-dollar valuations before the end of 2026. Not a stretch considering that Protect AI, one of the AI model protection companies, is reportedly being acquired by Palo Alto Networks for between $600 and $750 million.

-One of these companies will have a billion dollars in revenue in 2027. That’s right. Faster growth than Wiz ever saw.

-The era of a target-rich environment for attackers will be over next year. Run-of-the-mill cybercrime will be over.

-The costs for attackers will skyrocket. Only nation states will have the resources to devise attack methods that will bypass AI defenses. It will be cheaper to infiltrate a target with human spies than it will be to devise a cyber attack that can penetrate the AI defenses.

I have always found it a valuable exercise to recognize when massive change is on the horizon and predict the impact. Everyone in the security industry should be evaluating the advent of superintelligence and making plans to take advantage of it.

Additional learning.

Carl Shulman Podcast with Dwarkesh Patel

Leopold Aschenbrenner’s Situational Awareness: The Decade Ahead. 165 pages.

Cybernomics Podcast with Josh Bruyning.

Jason Clinton, CISO of Anthropic, warns that fully AI employees are a year away.

Thomas Ptacek knows whereof he speaks. My AI Skeptic Friends Are All Nuts.
