A Survey of Deep Fake Detection Tools

Last week The Wall Street Journal reported that the White House chief of staff’s phone was apparently “hacked” and some of her contacts received calls that could have used AI to imitate her voice.

Leaving aside the natural distrust you may feel for politicians and celebrities when they claim “their phones are hacked,” there is no question that faking voice and video is now almost trivial. I have had several phone conversations with AI sales bots that were almost indistinguishable from humans.

Here is a video+voice generated from a text prompt to Google Veo3.

Just for fun, here is another.

There have been other, more sensational stories of deep fakes. The most cited is from this February: “Finance worker pays out $25 million after video call with deepfake ‘chief financial officer.’” I am not sure I believe that one, but you can imagine it working, since there are plenty of scams that people fall for with just email.

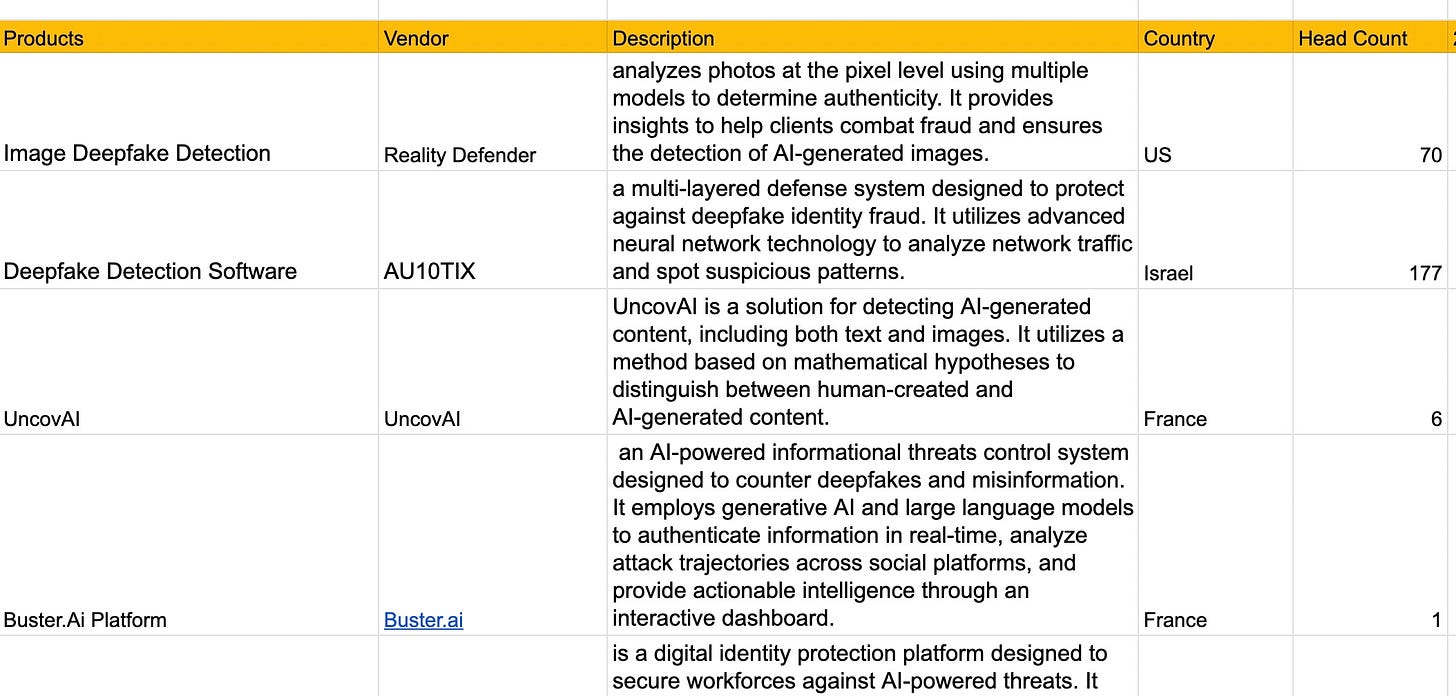

The threat is giving rise to many approaches to counter deep fakes. A quick search of dashboard.it-harvest.com reveals 28 products from 21 vendors.

Here is a link to a Google Sheet listing them.

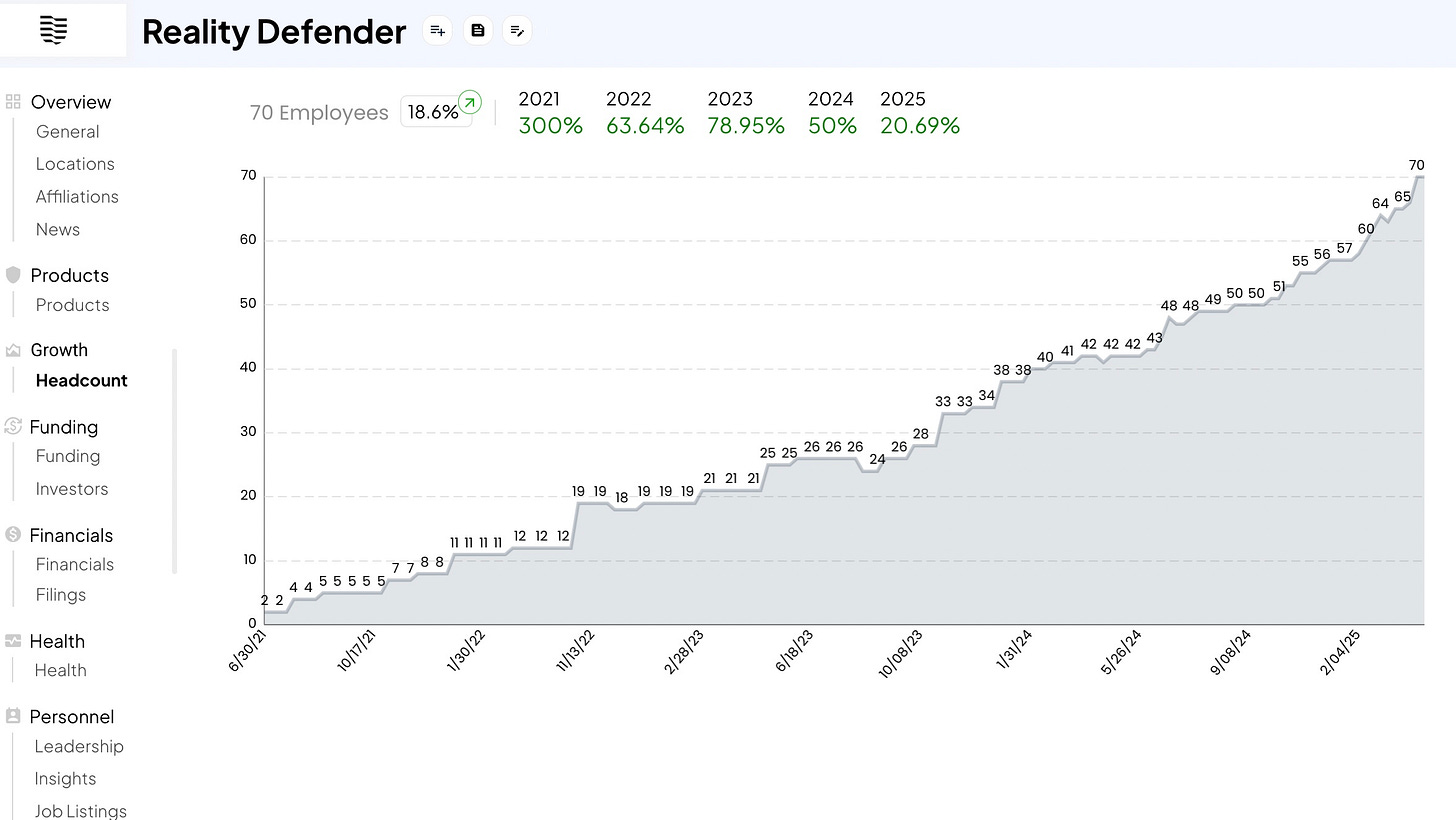

Of the pure-play deep fake defense companies, Reality Defender is leading, with significant headcount growth and funding. Note they are a Cyber 150 company.

Another pure-play is Cyabra, about the same size as Reality Defender but with one-fourth the funding to date.

Outside of pure-play deep fake detection are identity verification providers that were already working on the problem of fake identities for fraud prevention. They are well positioned in this space. Pindrop, Yotim and Incode are all great examples of this. They are all much bigger than the startups.

There is no question that deep fakes are a real and present danger, and this market will grow dramatically. The identity verification vendors may have to acquire the pure-plays. The large platform vendors (looking at you Twitter, Facebook, etc.) will have to deploy technology to identify deep fakes, and they may acquire one of these vendors too.

The intersection of deep fakes with the "remote IT worker" scams could be a serious threat. Today, foreign actors create fake resumes at job sites and have begun to pay parties to do Zoom interviews. The parties follow a script or are instructed on how to respond in real time. Is it a stretch to imagine an AI agent in this role?